In the second of his two-part blog post, Dan Muir, Senior Economist at Youth Futures Foundation and past Data Impact Fellow, discusses what impact evaluation is and why it matters.

In part one of this post I described what impact evaluation is, and what needs to be in place before we can start applying it to specific interventions.

There are plenty of other theoretical details that you can get stuck into, but let’s move on to a quick summary of the different impact evaluation methods available.

Randomised Controlled Trials

Firstly, we have Randomised Controlled Trials (RCTs). These are seen as the gold standard of impact evaluation, and they are the key method that follows the first approach to developing a counterfactual mentioned in the first post.

The idea behind an RCT is to take a group of similar individuals who could participate in an intervention and randomly assign them either to experience the intervention or not. Done this way, you should be able to satisfy all of the key criteria of a good counterfactual. With a sufficient number of people, you can be confident that, following random assignment, the two groups are similar in their characteristics (you can also test this by comparing, across the two groups, whichever characteristics are observable in the data you collect). It can therefore be assumed that they would have reacted to the intervention in the same way.
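To make the mechanics concrete, here is a minimal Python sketch of random assignment followed by the balance check described above. It is illustrative only: the characteristics (`age`, `months_unemployed`), the employment outcome and the 10 percentage point effect are all simulated assumptions, and the helper functions are hypothetical rather than taken from any particular trial. Because assignment is random, the differences in the groups’ average characteristics should be close to zero, and the simple difference in mean outcomes should land near the effect built into the data.

```python
import random
import statistics


def randomise(people, seed=0):
    """Shuffle the eligible people and split them into two groups of (near) equal size."""
    rng = random.Random(seed)
    shuffled = list(people)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


def balance_check(treatment, control, characteristics):
    """Difference in the mean of each observed characteristic (treatment minus control)."""
    return {
        c: statistics.fmean(p[c] for p in treatment) - statistics.fmean(p[c] for p in control)
        for c in characteristics
    }


def difference_in_means(treatment, control, outcome):
    """With random assignment, the control group's mean outcome stands in for the counterfactual."""
    return (statistics.fmean(p[outcome] for p in treatment)
            - statistics.fmean(p[outcome] for p in control))


def simulate_trial(n=10_000, true_effect=0.10, seed=1):
    """Hypothetical trial with invented characteristics, outcome and a known effect."""
    rng = random.Random(seed)
    people = [{"age": rng.randint(16, 24), "months_unemployed": rng.randint(0, 24)}
              for _ in range(n)]
    treatment, control = randomise(people, seed=rng.randrange(2**32))
    for group, treated in ((treatment, True), (control, False)):
        for p in group:
            # Assumed model: the chance of being in work falls with time out of work,
            # and the intervention adds true_effect to it.
            prob = 0.5 - 0.01 * p["months_unemployed"] + (true_effect if treated else 0.0)
            p["in_work"] = 1 if rng.random() < prob else 0
    balance = balance_check(treatment, control, ["age", "months_unemployed"])
    return balance, difference_in_means(treatment, control, "in_work")


balance, impact = simulate_trial()
print("differences in means:", balance)
print(f"estimated impact: {impact:.3f} (built-in effect: 0.10)")
```

On real trial data you would only need `randomise`, `balance_check` and `difference_in_means`; `simulate_trial` is there purely so the sketch has something to run on.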

As an example of what an RCT looks like, the health-led employment trials were a series of RCTs used to evaluate Individualised Placement and Support, a form of employment support for individuals with health conditions that act as a barrier to them entering work.

Whilst RCTs are held in high regard in the impact evaluation world, they have key limitations.

On the point that they produce a good counterfactual, you will need to check that the two groups have similar exposure to other interventions. To account for this, you may specify in the trial’s design which interventions the comparison group does experience, but enforcing compliance can be tricky, and restricting the control group’s access to other interventions as part of the trial design might not be ethical. Putting the randomisation into practice can also be really challenging.

Additionally, you may need to account for observational effects. For instance, the Hawthorne effect occurs when people behave differently simply because they are being watched as part of an experiment; if this changes how the control group acts, it affects the validity of the counterfactual.

Quasi-Experimental Designs

Because of these challenges, using an RCT to evaluate policy is not always possible. Take the Youth Guarantee, for instance: unless an RCT is built into the delivery of the programme, it wouldn’t be ethical to restrict the control group’s access to what is intended to be a universal policy for 18-21 year-olds just to perform the evaluation. In cases like this, Quasi-Experimental Designs (QEDs) can provide an alternative option.

This is a family of impact evaluation techniques that follows the second approach to building a counterfactual: exploiting a pre-existing group of individuals who are not treated by the intervention, either because they are ineligible or because they choose not to take part. These techniques are inherently less robust than RCTs because the reasons why an individual is not eligible, or chooses not to participate, might also lead to differences in their outcomes, affecting the quality of the counterfactual.

These reasons, or characteristics, can be observable – this is typically the case when the comparison group is formed because of ineligibility.

Thinking about the Youth Guarantee, as only 18-21 year-olds are eligible to participate, the outcomes of 22 year-olds who can’t participate could be compared with those of 21 year-olds who can and do participate to provide a fairly close comparison. But these characteristics can also be unobservable, which is typically the case when the comparison group is made up of people who choose not to participate. This can introduce selection bias, which occurs when the reasons why someone chooses to do something also affect their outcomes.

So, for instance, suppose a less motivated 21 year-old chooses not to participate in the Youth Guarantee and is used to build the counterfactual for a highly motivated 21 year-old who does participate. Their lack of motivation might mean they are less likely to be in work, in good work, or highly paid than the highly motivated young person, even in the counterfactual world where the Youth Guarantee was never introduced.
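A quick simulation shows how this plays out. In this hypothetical Python sketch, motivation is the only confounder and is never recorded; motivated young people are both more likely to sign up and more likely to be in work anyway. All probabilities, and the 5 percentage point effect, are invented for illustration. Comparing participants with non-participants then overstates the built-in effect by a wide margin, and nothing in the observed data would reveal this.

```python
import random
import statistics


def simulate_selection_bias(n=20_000, true_effect=0.05, seed=2):
    """Naive participant vs non-participant comparison when motivation drives both.

    Returns the naive estimate; all probabilities are invented for illustration.
    """
    rng = random.Random(seed)
    participants, non_participants = [], []
    for _ in range(n):
        motivated = rng.random() < 0.5  # never recorded in the data
        # More motivated young people are more likely to sign up...
        participates = rng.random() < (0.8 if motivated else 0.2)
        # ...and more likely to be in work anyway, with or without the programme.
        prob_work = (0.6 if motivated else 0.3) + (true_effect if participates else 0.0)
        in_work = 1 if rng.random() < prob_work else 0
        (participants if participates else non_participants).append(in_work)
    return statistics.fmean(participants) - statistics.fmean(non_participants)


naive = simulate_selection_bias()
print(f"built-in effect: 0.05, naive comparison: {naive:.2f}")
```

With these made-up numbers, participants are 80% motivated and non-participants only 20%, so the naive gap should come out around 0.23 in expectation, more than four times the true effect.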

The potential for these issues to exist always needs consideration when thinking about performing a QED.

So what specific types of QED are there?

Regression Discontinuity Design

One option is what is known as a Regression Discontinuity Design (RDD). This can be used where eligibility to participate is based on some type of scoring system.

Think, for instance, of additional support provided in schools to students who are falling behind in class. If these students are selected based on their grades, the additional support they receive could be evaluated in this way. An RDD compares the outcomes of individuals who fall just either side of the score threshold that determines whether or not they receive the support.

So, for instance, suppose that all students scoring below 50% in a mock exam receive additional support. You could compare the outcomes of students who score 49% on the mock with those who score 50%. You would expect these students to have pretty similar outcomes in a world without additional support (on any given day, it’s fairly random whether someone scores 49% or 50% on a test), so any difference in end-of-year test scores between those who do and do not go on to receive the additional support can be ascribed to this intervention.
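Here is a rough Python sketch of this kind of sharp RDD, under made-up assumptions: mock scores are drawn uniformly between 20 and 80, end-of-year scores follow the mock score plus noise, and support (worth a hypothetical 5 points) goes to everyone below 50. Rather than comparing only the 49s with the 50s, which leaves very few students in each group, it uses everyone within a few points of the threshold and fits a straight line on each side (a local linear regression), so the underlying upward trend in scores isn’t mistaken for an effect. The estimate is the jump between the two lines at the threshold; the function names and the bandwidth of 5 points are illustrative choices.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def rdd_estimate(mock, final, threshold=50.0, bandwidth=5.0):
    """Sharp RDD where support goes to every student scoring below `threshold`.

    Fits a separate straight line on each side of the threshold, using only students
    within `bandwidth` points of it, and returns the jump between the two lines at
    the threshold (supported side minus unsupported side).
    """
    below = [(m - threshold, f) for m, f in zip(mock, final)
             if threshold - bandwidth <= m < threshold]
    above = [(m - threshold, f) for m, f in zip(mock, final)
             if threshold <= m <= threshold + bandwidth]
    # Scores are centred on the threshold, so each intercept is the line's value there.
    a_below, _ = fit_line(*zip(*below))
    a_above, _ = fit_line(*zip(*above))
    return a_below - a_above


def simulate_students(n=20_000, effect=5.0, seed=3):
    """Invented data: end-of-year scores track mock scores, plus noise and the support effect."""
    rng = random.Random(seed)
    mock, final = [], []
    for _ in range(n):
        m = rng.uniform(20, 80)
        supported = m < 50
        final.append(10 + 0.8 * m + (effect if supported else 0.0) + rng.gauss(0, 5))
        mock.append(m)
    return mock, final


mock, final = simulate_students()
print(f"estimated effect at the threshold: {rdd_estimate(mock, final):.2f} (built-in: 5.0)")
```

A narrower bandwidth makes the two groups more alike but leaves fewer students to estimate from; in practice this trade-off is usually explored by re-running the estimate with several bandwidths.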

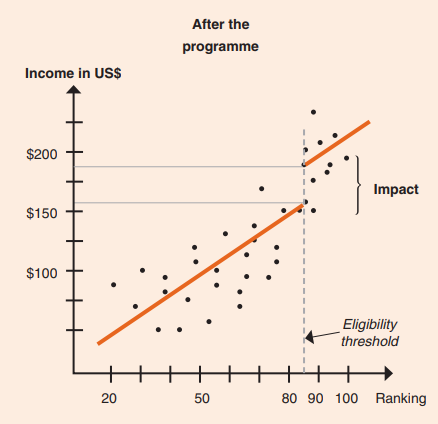

See below a visualisation, again from the ILO, providing an example of what this might look like – in this example, the eligibility threshold occurs at a ranking of 85 (whatever that means here!), with there then being a discontinuity in outcomes (i.e., income in US$) which can only be explained by whatever intervention it is that is being evaluated. As a real work example of an RDD in the youth employment space, see this evaluation of the New Deal for Young People.

Image from ILO Guide on Measuring Decent Jobs for Youth

Instrumental Variable

Another potential impact evaluation technique is known as an Instrumental Variable (IV) approach.

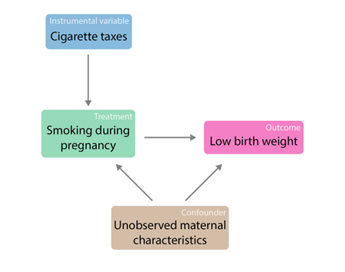

This is used where whether someone experiences the intervention is endogenous, i.e., factors affecting whether someone experiences the intervention also affect their outcomes. An IV approach is possible when an instrument Z exists which affects whether or not an individual experiences intervention W, affects outcome X only through W, and is not related to the confounder Y.

The diagram above gives an example of this. Suppose we wanted to understand whether smoking during pregnancy affects the child’s birth weight (clearly an important public policy issue). The child’s birth weight will also be affected by a range of other factors which in turn affect the likelihood of the mother smoking during pregnancy, for example any work-related stress the mother experiences. To estimate the causal impact of smoking during pregnancy on the child’s birth weight, an evaluation could exploit the introduction of cigarette taxes: these will affect whether or not the mother smokes during pregnancy, due to the increased cost of doing so, but are not related to whether or not she experiences work-related stress.

By using only the changes in treatment status that are driven by the instrumental variable, we can isolate the causal link between treatment and outcome and remove the influence of the confounder. As a real-world example of an IV approach, see this evaluation of Work First Job Placements.
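The smoking example can be simulated in Python to show why the instrument helps. Every number in this sketch is invented: stress (the unobserved confounder Y) makes smoking more likely and lowers birth weight directly, the cigarette tax (the instrument Z) is treated as simply in force or not and lowers the chance of smoking (W), and smoking reduces birth weight (X) by a built-in 200 grams. With a single binary instrument and no other covariates, the IV estimate reduces to the Wald estimator: the instrument’s effect on the outcome divided by its effect on treatment. The function names are hypothetical.

```python
import random


def mean_where(values, groups, group):
    """Mean of `values` over the observations whose entry in `groups` equals `group`."""
    selected = [v for v, g in zip(values, groups) if g == group]
    return sum(selected) / len(selected)


def wald_estimate(z, w, x):
    """IV estimate with a binary instrument: effect of z on x divided by effect of z on w."""
    reduced_form = mean_where(x, z, 1) - mean_where(x, z, 0)
    first_stage = mean_where(w, z, 1) - mean_where(w, z, 0)
    return reduced_form / first_stage


def simulate_births(n=200_000, true_effect=-200.0, seed=4):
    """Invented data; returns (naive estimate, IV estimate) of smoking's effect in grams."""
    rng = random.Random(seed)
    z, w, x = [], [], []
    for _ in range(n):
        stressed = 1 if rng.random() < 0.4 else 0  # confounder Y: not in the data
        taxed = 1 if rng.random() < 0.5 else 0     # instrument Z: unrelated to stress
        # Stress raises, and the tax lowers, the chance of smoking (treatment W).
        smokes = 1 if rng.random() < 0.2 + 0.3 * stressed - 0.15 * taxed else 0
        # Stress also lowers birth weight (outcome X) directly, which biases a naive comparison.
        weight = 3400 + true_effect * smokes - 150 * stressed + rng.gauss(0, 400)
        z.append(taxed)
        w.append(smokes)
        x.append(weight)
    naive = mean_where(x, w, 1) - mean_where(x, w, 0)
    return naive, wald_estimate(z, w, x)


naive, iv = simulate_births()
print(f"naive: {naive:.0f} g, IV: {iv:.0f} g (built-in effect: -200 g)")
```

The naive smoker versus non-smoker gap also picks up the effect of stress, so it overstates the harm, whereas the Wald estimate should sit close to -200. Here the tax affects everyone’s behaviour in the same way; in practice an IV estimate reflects the effect for those whose treatment status the instrument actually changes.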

Difference-in-Differences

If outcomes are measured both before and after an intervention is introduced, a Difference-in-Differences (DiD) approach might be possible.

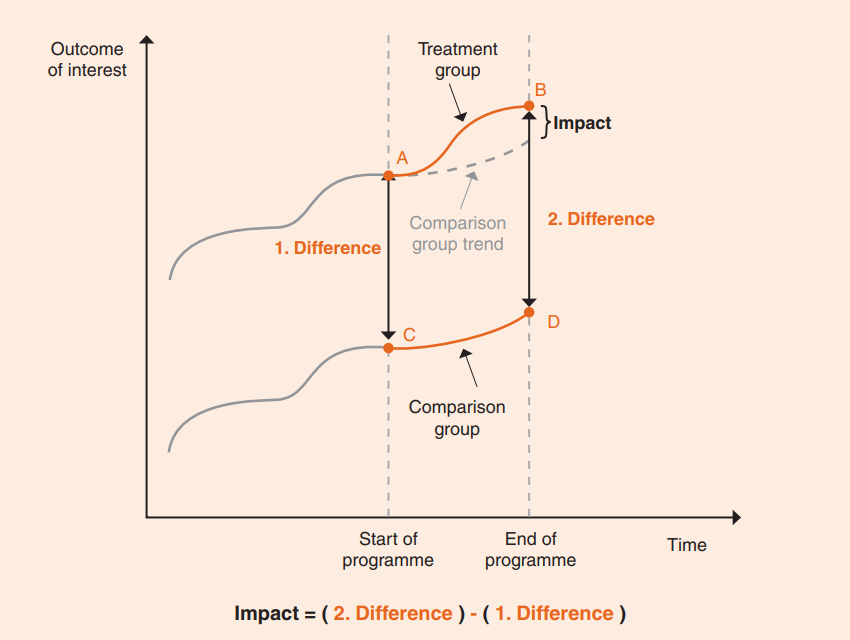

This approach says that, should we be able to assume that the outcomes of the individuals that go on to form the treatment and comparison groups would have followed the same trend had the policy not been introduced, and change in the difference in their outcomes from before to after the policy was introduced can be assigned to the policy itself. See again below a visualisation from the ILO of the idea behind this technique, and as an example of a DiD approach in the youth employment space, see this evaluation of the New Deal for Young People.

Image from ILO Guide on Measuring Decent Jobs for Youth
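The calculation at the heart of DiD is simple arithmetic on four group averages. The minimal Python sketch below uses invented local-area employment rates (in %) for a treatment and a comparison group, before and after a hypothetical policy; the record layout and function name are illustrative assumptions.

```python
from collections import defaultdict


def diff_in_diff(records):
    """DiD estimate from (group, period, outcome) records.

    group is "treatment" or "comparison"; period is "before" or "after".
    """
    totals, counts = defaultdict(float), defaultdict(int)
    for group, period, outcome in records:
        totals[(group, period)] += outcome
        counts[(group, period)] += 1
    mean = {key: totals[key] / counts[key] for key in totals}
    change_treatment = mean[("treatment", "after")] - mean[("treatment", "before")]
    # Same-trend assumption: the comparison group's change is what the treatment
    # group would have experienced without the policy.
    change_comparison = mean[("comparison", "after")] - mean[("comparison", "before")]
    return change_treatment - change_comparison


# Hypothetical local-area employment rates (%), two areas per group.
records = [
    ("treatment", "before", 38), ("treatment", "before", 42),
    ("treatment", "after", 53), ("treatment", "after", 57),
    ("comparison", "before", 44), ("comparison", "before", 46),
    ("comparison", "after", 49), ("comparison", "after", 51),
]
print(diff_in_diff(records))  # → 10.0
```

In this made-up data, a before-and-after comparison of the treatment group alone would suggest 15 points, and an after-only comparison with the comparison group would suggest 5. DiD nets off the 5-point rise the comparison group saw anyway, which is only valid if the two groups would otherwise have followed the same trend.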

Propensity Score Matching

And the last key impact evaluation technique I’ll touch on here is Propensity Score Matching (PSM).

This can be used when whether or not an individual participates in an intervention is determined by observable characteristics. Where this is the case, you can match individuals who participate with those who don’t but who, based on their characteristics, have a similar estimated probability of participating (their propensity score). For a real-world example of this in the youth employment space, see this evaluation of the Youth Contract.
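A minimal PSM sketch in pure Python follows. It assumes, as PSM requires, that participation depends only on observed characteristics; here two invented ones, months out of work and age, both simulated along with a built-in 8 percentage point effect. It estimates each person’s propensity score with a logistic regression fitted by plain gradient ascent (a real analysis would normally use a statistics library for this), matches each participant to the non-participant with the closest score (with replacement), and averages the differences in outcomes to estimate the effect on participants. All function names are hypothetical.

```python
import bisect
import math
import random
import statistics


def standardise(columns):
    """Rescale each column to mean 0 and standard deviation 1; returns one row per person."""
    scaled = []
    for col in columns:
        m, s = statistics.fmean(col), statistics.pstdev(col)
        scaled.append([(v - m) / s for v in col])
    return [list(row) for row in zip(*scaled)]


def propensity(beta, xi):
    """Logistic model: estimated probability of participating given characteristics xi."""
    eta = beta[0] + sum(b * v for b, v in zip(beta[1:], xi))
    return 1.0 / (1.0 + math.exp(-eta))


def fit_logit(X, d, lr=2.0, iterations=100):
    """Fit the logistic model by gradient ascent on the mean log-likelihood."""
    n, k = len(X), len(X[0])
    beta = [0.0] * (k + 1)
    for _ in range(iterations):
        grad = [0.0] * (k + 1)
        for xi, di in zip(X, d):
            resid = di - propensity(beta, xi)
            grad[0] += resid
            for j, v in enumerate(xi, start=1):
                grad[j] += resid * v
        beta = [b + lr * g / n for b, g in zip(beta, grad)]
    return beta


def psm_att(X, d, y):
    """Nearest-neighbour matching on the propensity score, with replacement.

    Returns the average effect on participants (the ATT).
    """
    beta = fit_logit(X, d)
    scores = [propensity(beta, xi) for xi in X]
    controls = sorted((s, yi) for s, di, yi in zip(scores, d, y) if di == 0)
    control_scores = [s for s, _ in controls]
    differences = []
    for s, di, yi in zip(scores, d, y):
        if di == 1:
            i = bisect.bisect_left(control_scores, s)
            # The closest non-participant sits just below or just above s in sorted order.
            neighbours = controls[max(i - 1, 0):i + 1]
            _, matched_y = min(neighbours, key=lambda c: abs(c[0] - s))
            differences.append(yi - matched_y)
    return statistics.fmean(differences)


def simulate_programme(n=6_000, true_effect=0.08, seed=5):
    """Invented data where participation depends only on observed characteristics."""
    rng = random.Random(seed)
    months, ages, d, y = [], [], [], []
    for _ in range(n):
        m, a = rng.uniform(0, 24), rng.uniform(16, 25)
        # People who have been out of work longer are more likely to take part...
        p_take_part = 1.0 / (1.0 + math.exp(-(-2.0 + 0.15 * m + 0.1 * (a - 16))))
        takes_part = 1 if rng.random() < p_take_part else 0
        # ...but less likely to find work, which masks the programme's effect.
        p_work = 0.6 - 0.015 * m + 0.02 * (a - 16) + true_effect * takes_part
        months.append(m)
        ages.append(a)
        d.append(takes_part)
        y.append(1 if rng.random() < p_work else 0)
    return standardise([months, ages]), d, y


X, d, y = simulate_programme()
naive = (statistics.fmean(yi for yi, di in zip(y, d) if di == 1)
         - statistics.fmean(yi for yi, di in zip(y, d) if di == 0))
print(f"naive: {naive:.3f}, matched: {psm_att(X, d, y):.3f} (built-in effect: 0.08)")
```

Because the simulated participants have typically been out of work for longer, the naive comparison should understate the built-in effect, while the matched estimate should land near it. The sketch only works because every driver of participation is in the data, which is exactly the assumption discussed next.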

All of the QED approaches outlined above have limitations, but PSM is perhaps the technique most easily challenged when used in isolation: for instance, if selection bias like that described earlier (the less motivated versus the highly motivated young person) exists but isn’t captured in the data, matching cannot account for it, since it can only balance the characteristics you observe.

Why does impact evaluation matter?

Performing an impact evaluation of public policies that are introduced is a critical endeavour.

It is vital to understand whether we are investing public money in policies that have an impact. The findings from an impact evaluation can then be used to inform an economic evaluation of the same policy, which assigns a monetary value to the policy’s outcomes for the participant, the Exchequer and society, and compares these with the cost of delivering the policy. This helps us to understand whether the investment in the policy provided value for money.
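As a stylised illustration of that last step, the Python sketch below turns an impact estimate into benefit-cost ratios. All figures are hypothetical: 1,000 participants, an impact of 0.08 extra people in work per participant, an assumed monetary value of each extra job from each perspective, and a delivery cost of £500 per participant. The perspectives are reported side by side rather than added together, since a society-wide figure may already include the others; real economic evaluations also handle timing, discounting and who bears the costs, which this sketch ignores.

```python
def benefit_cost_ratios(participants, impact_per_participant, value_per_outcome,
                        cost_per_participant):
    """Monetised benefits divided by delivery cost, from each perspective.

    impact_per_participant: extra outcomes per participant (from the impact evaluation).
    value_per_outcome: hypothetical £ value of one extra outcome, keyed by perspective.
    """
    extra_outcomes = participants * impact_per_participant
    total_cost = participants * cost_per_participant
    return {
        perspective: extra_outcomes * value / total_cost
        for perspective, value in value_per_outcome.items()
    }


ratios = benefit_cost_ratios(
    participants=1_000,
    impact_per_participant=0.08,
    value_per_outcome={"participant": 6_000, "Exchequer": 4_000, "society": 12_000},
    cost_per_participant=500,
)
for perspective, ratio in ratios.items():
    print(f"{perspective}: £{ratio:.2f} of benefit per £1 spent")
```

A ratio above 1 means that, from that perspective, the monetised benefits exceed the cost of delivering the policy.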

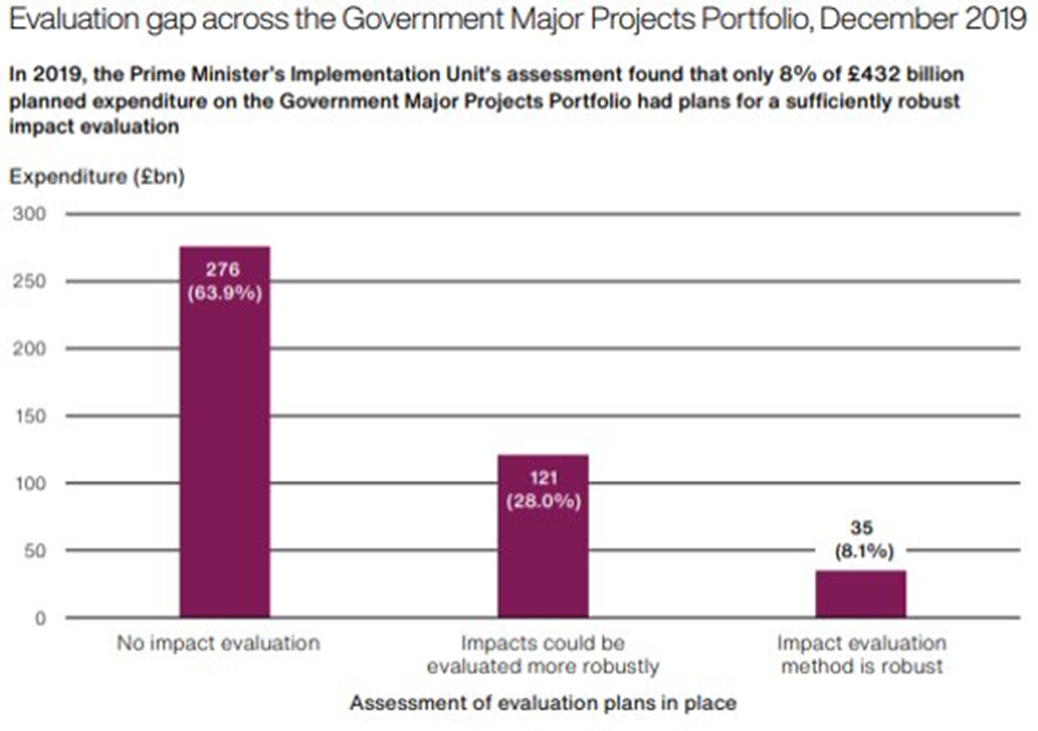

Despite their importance, impact evaluations are still missing for a large amount of public spending: approximately 64% of the £432 billion of planned expenditure in the Government Major Projects Portfolio in 2019 was not going to be covered by an impact evaluation, and a further 28% was subject to impact evaluations that could have been done better.

With the recent change in government, and early signs from Labour that it will take an evidence-based approach to decision-making, this will hopefully signal a refocusing of public policy on what impact evaluations have demonstrated to work.

About the author

Dan was part of the 2023 cohort of Data Impact Fellows and works as a Senior Economist at Youth Futures Foundation. In this role, he is working to develop a programme of Randomised Controlled Trials with employers to assess how recruitment and retention practices can be changed to support the outcomes of disadvantaged groups of young people. His main research interests include unemployment and welfare, low pay, and skill demand and utilisation.